Artificial intelligence is transforming the world at an unprecedented pace. One of the foundational architectures behind this revolution is the Fully Connected Neural Network, or FCNN. These networks enable machines to learn patterns directly from data and use them to make predictions and decisions. From image recognition to natural language processing, fully connected layers play a part in many applications that affect our daily lives, often as building blocks inside larger models.

But what exactly makes these neural networks so powerful? In this article, we will explore how FCNNs are structured, how they are trained, the challenges they face, and the advancements shaping their future. Buckle up for an enlightening journey into how FCNN technology is reshaping AI as we know it!

The basic structure of an FCNN

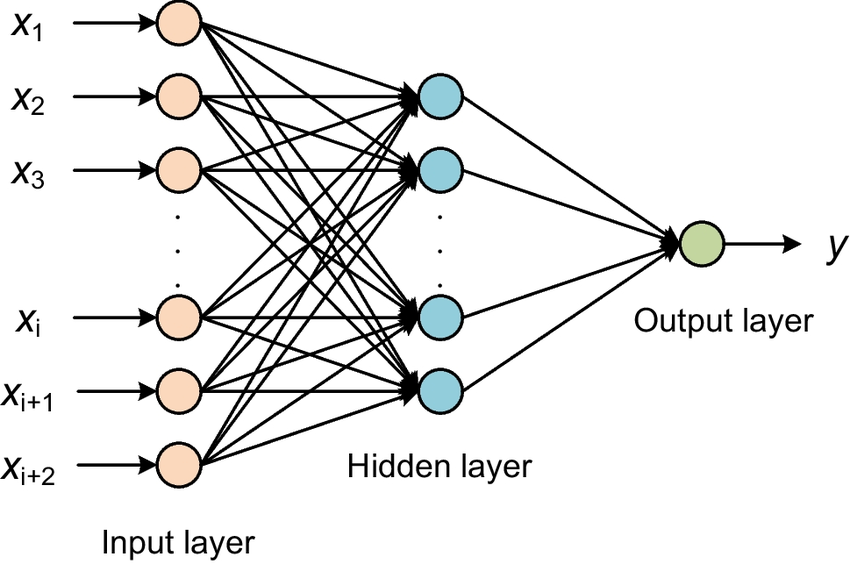

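A Fully Connected Neural Network (FCNN) consists of layers of neurons that work together to process data. It is called "fully connected" because every neuron in one layer is connected to every neuron in the next. The architecture is typically divided into three main components: input, hidden, and output layers.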

The input layer receives the raw data. Each neuron in this layer corresponds to one feature in the dataset, such as a pixel value or a numeric column. The input layer performs no computation of its own; it simply passes these feature values on to the first hidden layer.

Hidden layers follow the input layer, and they are where most of the computation happens. Their number and size vary with the complexity of the problem being addressed. Each hidden neuron computes a weighted sum of all outputs from the previous layer, adds a bias, and passes the result through a nonlinear activation function such as ReLU or the sigmoid.
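The computation each neuron performs can be written compactly. The notation below is our own, chosen for illustration:

- a is a neuron's output.
- w is a weight and b is a bias.
- φ is the activation function.
- l is the layer index, and n_{l-1} is the number of neurons in the previous layer.
- Layer 0 holds the input features x.

```latex
a_j^{(l)} = \phi\!\left( \sum_{i=1}^{n_{l-1}} w_{ji}^{(l)}\, a_i^{(l-1)} + b_j^{(l)} \right),
\qquad
\mathbf{a}^{(l)} = \phi\!\left( W^{(l)} \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)} \right),
\qquad
\mathbf{a}^{(0)} = \mathbf{x}
```

The second form is the same computation for a whole layer at once. The weight matrix W^{(l)} has one row per neuron in layer l and one column per neuron in layer l-1.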

Finally, there is the output layer. It produces predictions or classifications based on the patterns the network learned during training. In classification tasks, each output neuron typically represents one possible category.
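To make the three layers concrete, here is a minimal forward pass in plain Python. It is a sketch rather than a production implementation, built on these assumptions:

- The layer sizes (3 inputs, 2 hidden neurons, 2 output classes) are made up for illustration.
- All weight values are made up as well.
- ReLU in the hidden layer and softmax at the output are common choices, not the only ones.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # weights[j][i] is the weight from input i to neuron j


def dense(x: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: every output neuron sees every input value."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(z: Vector) -> Vector:
    return [max(0.0, v) for v in z]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    hidden = relu(dense(x, w1, b1))        # input layer -> hidden layer
    return softmax(dense(hidden, w2, b2))  # hidden layer -> class probabilities


# Hypothetical toy weights: 3 input features, 2 hidden neurons, 2 output classes.
w1 = [[0.2, -0.5, 1.0],
      [0.7, 0.1, -0.3]]
b1 = [0.0, 0.1]
w2 = [[1.0, -1.0],
      [-0.5, 0.5]]
b2 = [0.0, 0.0]

probs = forward([1.0, 2.0, 3.0], w1, b1, w2, b2)
print(probs)  # two probabilities that sum to 1
```

Real frameworks perform the same computation with matrix operations on whole batches of inputs at once, but the per-neuron arithmetic is identical.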

Stacking layers with nonlinear activations enables FCNNs to model intricate, nonlinear relationships within data, making them powerful tools for applications such as image recognition and natural language processing.

Training and learning in FCNNs

Training an FCNN is a fascinating process. At its core, it involves feeding data through the network, measuring how far its predictions are from the correct answers, and adjusting the weights to reduce that error. This is where backpropagation comes into play.

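Backpropagation computes the gradient of the loss function with respect to every weight and bias. It does so by applying the chain rule layer by layer, from the output back toward the input. An optimizer such as gradient descent then uses these gradients to nudge each parameter in the direction that reduces the loss. The learning rate sets the size of each step: too large and training can overshoot or diverge, too small and it converges slowly.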
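The sketch below trains a tiny FCNN on the XOR problem, writing out backpropagation by hand. The network has 2 inputs, a hidden layer of tanh neurons, and 1 sigmoid output. It rests on these assumptions:

- The loss is binary cross-entropy.
- The optimizer is plain stochastic gradient descent, updating the weights after each example.
- The hidden size, learning rate, epoch count, and random initialization are arbitrary illustrative choices.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden: int = 4, lr: float = 0.5, epochs: int = 2000, seed: int = 0):
    """Train a 2-input, `hidden`-unit, 1-output FCNN on XOR with hand-written backprop.
    Returns the average cross-entropy loss of each epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    # Small random starting weights (an arbitrary initialization choice).
    w1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0
    eps = 1e-12  # keeps log() finite if the output saturates
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward pass: tanh hidden layer, sigmoid output.
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
            p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
            p_safe = min(max(p, eps), 1.0 - eps)
            total -= y * math.log(p_safe) + (1.0 - y) * math.log(1.0 - p_safe)
            # Backward pass via the chain rule.
            # For a sigmoid output with cross-entropy loss, dLoss/dlogit = p - y.
            d_out = p - y
            # Error at each hidden pre-activation (uses w2 before it is updated);
            # the derivative of tanh(z) is 1 - tanh(z)**2.
            d_hidden = [d_out * w2[j] * (1.0 - h[j] ** 2) for j in range(hidden)]
            # Gradient-descent step: move every parameter against its gradient.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                for i in range(2):
                    w1[j][i] -= lr * d_hidden[j] * x[i]
                b1[j] -= lr * d_hidden[j]
            b2 -= lr * d_out
        losses.append(total / len(data))
    return losses


losses = train_xor()
print(f"first epoch loss: {losses[0]:.4f}, last epoch loss: {losses[-1]:.4f}")
```

Here `lr` is the learning rate discussed above. Raising or lowering it is an easy way to see how the speed and stability of training change.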

As training progresses, layers learn increasingly complex features of the input data. Early layers might capture simple patterns while deeper ones identify intricate relationships.

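Regularization techniques often accompany this process to prevent overfitting. Two common choices are dropout, which randomly disables a fraction of neurons at each training step, and L2 regularization, which adds a penalty on large weights to the loss. Both encourage the network to generalize beyond its training data.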
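A minimal sketch of both techniques in plain Python follows. It makes these choices:

- It uses "inverted" dropout, the common variant that rescales surviving activations during training so that nothing needs to change at inference time.
- It uses one common form of the L2 penalty: λ times the sum of squared weights.
- The function names and the value of λ (`lam`) are our own illustrative choices.

```python
import random
from typing import List


def dropout(activations: List[float], p_drop: float, rng: random.Random,
            training: bool = True) -> List[float]:
    """Inverted dropout: during training, zero each activation with probability
    p_drop and scale the survivors by 1 / (1 - p_drop), keeping the expected
    value unchanged. At inference time, activations pass through untouched."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights: List[List[float]], lam: float) -> float:
    """L2 term added to the training loss: lam * (sum of squared weights)."""
    return lam * sum(w * w for row in weights for w in row)


rng = random.Random(42)
hidden = [0.5, 1.2, 0.0, 0.8]
print(dropout(hidden, p_drop=0.5, rng=rng))     # roughly half the values zeroed, rest doubled
print(l2_penalty([[0.5, -1.0], [2.0, 0.1]], lam=0.01))
```

During training, the L2 term is added to the data loss. Its gradient, 2λw for each weight, therefore steadily pulls weights toward zero.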

Together, the forward pass, backpropagation, and regularization form the learning loop that makes FCNNs such versatile tools across applications.

Common challenges and limitations of FCNNs

Fully Connected Neural Networks (FCNNs) are powerful, but they face several challenges. One significant issue is overfitting. FCNNs can become too specialized to the training data, leading to poor performance on unseen examples.

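Another concern is computational cost. Because every neuron connects to every neuron in the next layer, the number of weights between two layers is the product of their sizes. Memory and compute therefore grow quickly as layers get wider. Training a large FCNN can demand substantial time and memory, which can make it impractical for smaller projects or limited hardware.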
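A quick way to see how fast the cost grows is to count parameters. The helper below is our own illustration. The layer sizes are assumptions for the example: 784 inputs (as for a flattened 28×28 grayscale image), two hidden layers, and 10 output classes.

```python
from typing import Sequence


def dense_param_count(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases in a stack of fully connected layers.
    A layer mapping n_in -> n_out units has n_in * n_out weights and n_out biases."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


print(dense_param_count([784, 512, 512, 10]))  # → 669706
```

Doubling both hidden layers to 1,024 units raises the total to 1,863,690 parameters, because the hidden-to-hidden block alone grows fourfold.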

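Additionally, FCNNs struggle with high-dimensional data. They treat every input feature as a separate input and ignore spatial structure. A flattened 224×224 RGB image (150,528 values) feeding a 1,000-unit hidden layer already needs more than 150 million weights in that layer alone. On top of this, the curse of dimensionality means that the amount of data needed to cover the input space grows exponentially with the number of features. This makes it harder for the network to generalize effectively.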
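One facet of the curse of dimensionality is that distances lose their meaning. As the number of features grows, the nearest and farthest points from any query end up almost equally far away, so similarity between examples carries less information.

The sketch below is our own illustration and measures this with the relative contrast, (max - min) / min of the distances. It assumes uniformly random points in a unit hypercube; real data is rarely uniform.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """(max distance - min distance) / min distance from one random query point
    to n_points drawn uniformly from the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))  # Euclidean distance (Python 3.8+)
    return (max(dists) - min(dists)) / min(dists)


for d in (2, 10, 100, 1000):
    print(d, round(relative_contrast(d), 3))
```

The contrast should shrink sharply as the dimension increases. In other words, in very high dimensions "close" and "far" examples become hard to tell apart.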

Interpretability remains a challenge. Understanding how decisions are made within these networks can be complex and opaque. This lack of transparency raises concerns in critical applications like healthcare or finance where trust is paramount.

Advancements in FCNN technology

Recent advancements have significantly improved the performance and efficiency of FCNNs. Researchers continue to explore architectures and training techniques that make deeper networks practical to train, allowing them to handle more intricate tasks.

Dropout and batch normalization have emerged as key techniques. Dropout mainly reduces overfitting. Batch normalization, which normalizes each layer's inputs using statistics from the current mini-batch, stabilizes and speeds up training. Together, they make it practical to train larger and deeper FCNNs.
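The normalization step of batch normalization can be sketched as below, in training mode for a fully connected layer. This is a simplified illustration under these assumptions:

- It uses the mini-batch mean and (biased) variance.
- It takes `gamma` and `beta` as given rather than learning them.
- It omits the running averages that real implementations keep for use at inference time.

```python
import math
from typing import List


def batch_norm(batch: List[List[float]], gamma: List[float], beta: List[float],
               eps: float = 1e-5) -> List[List[float]]:
    """Training-mode batch normalization. batch[n][f] is feature f of example n.
    Each feature is normalized with its mini-batch mean and variance, then
    scaled by gamma[f] and shifted by beta[f] (learned in a real network)."""
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for f in range(n_features):
        column = [row[f] for row in batch]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n  # biased (population) variance
        for i, v in enumerate(column):
            out[i][f] = gamma[f] * (v - mean) / math.sqrt(var + eps) + beta[f]
    return out


# Hypothetical mini-batch of 4 examples with 2 features on very different scales.
batch = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0], [4.0, 800.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(normalized)
```

Both features end up on the same scale (mean near 0, variance near 1), even though the second one is 200 times larger in the raw data.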

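Another exciting development is transfer learning. Instead of training from scratch, this approach starts from a model pretrained on a large dataset and keeps most of its layers. Only a few layers are retrained for the new task, often the final fully connected ones. This reduces both the computational cost and the time spent on training.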
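The idea can be sketched in a few lines: a frozen feature extractor plus a small trainable output layer. Everything here is hypothetical:

- `FROZEN_W` and `FROZEN_B` stand in for weights that would normally come from a pretrained model.
- The four-example dataset is made up.

Only the new head, a logistic-regression layer, is updated, which is why training is cheap.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "pretrained" hidden layer (3 neurons, 2 inputs). In practice these
# weights would come from a model trained on a large dataset; here they stay frozen.
FROZEN_W = [[0.9, -0.4], [-0.6, 0.8], [0.5, 0.5]]
FROZEN_B = [0.0, 0.1, -0.2]


def features(x):
    """Frozen feature extractor: one fully connected layer with ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(FROZEN_W, FROZEN_B)]


def mean_loss(head_w, head_b, data):
    """Average binary cross-entropy of the head on top of the frozen features."""
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * h for w, h in zip(head_w, features(x))) + head_b)
        p = min(max(p, 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(data)


def train_head(data, lr=0.1, epochs=200):
    """Gradient descent on the new output layer only; FROZEN_W/FROZEN_B never change."""
    head_w = [0.0] * len(FROZEN_W)
    head_b = 0.0
    feats = [(features(x), y) for x, y in data]  # computed once: the extractor is frozen
    for _ in range(epochs):
        for h, y in feats:
            p = sigmoid(sum(w * hj for w, hj in zip(head_w, h)) + head_b)
            err = p - y  # gradient of cross-entropy w.r.t. the output logit
            head_w = [w - lr * err * hj for w, hj in zip(head_w, h)]
            head_b -= lr * err
    return head_w, head_b


# Hypothetical toy dataset for the new task.
data = [([1.0, 0.0], 1.0), ([0.0, 1.0], 0.0), ([0.8, 0.2], 1.0), ([0.1, 0.9], 0.0)]
head_w, head_b = train_head(data)
print(mean_loss([0.0, 0.0, 0.0], 0.0, data), mean_loss(head_w, head_b, data))
```

Because the frozen layer never changes, its features are computed once up front. That saving matters far more when the frozen part is a large pretrained network.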

Hardware improvements also play a crucial role in advancing FCNN capabilities. The core operation of a fully connected layer is a matrix multiplication, which Graphics Processing Units (GPUs) and specialized AI chips execute in parallel. This speed makes it feasible to deploy deep learning in real-time scenarios across many industries.

Real-world examples of FCNNs in action

FCNNs have made significant strides in various sectors, showcasing their versatility. One prominent application is image recognition. Tech companies such as Google use image-recognition models for object detection and facial recognition. These models typically combine convolutional layers with fully connected layers that produce the final classification.

In healthcare, neural networks that include fully connected layers help analyze medical images to flag anomalies such as tumors or fractures. This supports doctors' diagnoses and can shorten the path to treatment.

Finance is another field benefiting from FCNN technology. Banks leverage these networks for credit scoring and fraud detection, streamlining decision-making while minimizing risk exposure.

Self-driving cars also rely on neural networks, including fully connected layers, to interpret data from sensors and cameras. By processing large amounts of information quickly, these networks help vehicles navigate complex environments safely.

These examples illustrate how FCNNs are paving the way for innovation across diverse industries. Their impact continues to grow as technology evolves.

Future possibilities for FCNNs in shaping AI

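The future of Fully Connected Neural Networks (FCNNs) holds considerable potential for shaping artificial intelligence. Fully connected layers already sit inside modern architectures, for example as the feed-forward blocks of transformer models used in natural language processing. As researchers continue to innovate, they are likely to keep playing a central role in both language processing and computer vision.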

One exciting possibility is their application in real-time decision-making systems. Imagine AI that not only understands context but also predicts outcomes based on previous data patterns—this could revolutionize industries from healthcare to finance.

Moreover, advancements in hardware technology might allow for deeper and more complex FCNN architectures. This could enable them to process vast amounts of information quickly and efficiently.

Additionally, integrating FCNNs with other neural network types can lead to hybrid models that leverage the strengths of various approaches. Such combinations could enhance performance across diverse tasks, making AI smarter and more adaptable than ever before.

Conclusion

Fully Connected Neural Networks (FCNNs) have revolutionized the landscape of artificial intelligence. Their ability to learn meaningful patterns from large amounts of data has made them a foundation of modern deep learning. As technology continues to evolve, so too will the capabilities of FCNNs.

With advancements in computational power and algorithms, we can expect these networks to become even more efficient and effective. Real-world applications are expanding, from healthcare diagnostics to autonomous vehicles. The potential for FCNNs in various sectors seems limitless.

As researchers continue to address existing challenges—like overfitting and computational demands—the future looks promising. Innovations in training methods and network architecture could redefine what’s possible with AI.

The journey of fully connected neural networks is far from over. Their influence on AI is hard to overstate, paving the way for smarter solutions that enhance our daily lives and push boundaries once thought insurmountable.