For digital marketers, content creators, and filmmakers, the ability to perform a seamless face swap has evolved from a simple novelty into a powerful capability. Advanced artificial intelligence algorithms are no longer just tools for humorous filters; they are reshaping the way we tell visual stories and giving editors far more flexibility in video and image work. Whether you want to revive old footage, create localized marketing campaigns, or simply entertain audiences, understanding the nuances of this technology is crucial. In this guide, we explore the mechanisms behind high-quality face swap, the key differences between static and dynamic editing, and the suite of AI tools that accompany it, from background removal to voice cloning. We also discuss how platforms like faceswap-ai.io democratize access to these professional-level features.

Beyond the Selfie: The Professional Potential of Image Face Swap

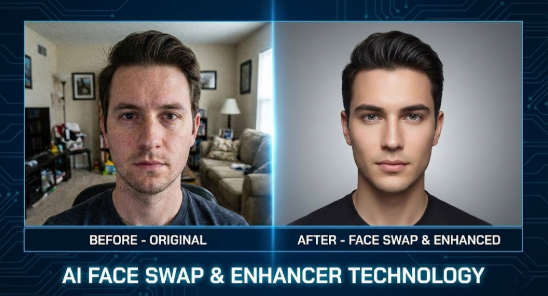

Any discussion of the foundations of AI manipulation has to start with image face swap. Many users are familiar with basic mobile apps that overlay one face on another, but the professional field has moved quickly toward high-fidelity generative models such as GANs (generative adversarial networks). A high-quality photo face swap is not just a matter of cutting and pasting features; it analyzes lighting conditions, skin texture, and facial geometry to produce a seamless blend that is almost impossible to detect with the naked eye. This capability is changing industries such as e-commerce and fashion. For example, a brand can now shoot with a single model and use image face swap technology to adjust the model's ethnicity or appearance for different global markets, without the logistical nightmare of arranging multiple shoots.

However, a convincing swap usually requires more than facial manipulation alone. Truly professional work tends to rely on supporting tools. The source image often suffers from low resolution or noise, and this is where an image enhancer becomes a key part of the workflow. By improving the quality of the base photo before or after the swap, creators can ensure that the final output stays sharp and printable. The environment around the subject matters as much as the face itself: a cluttered or irrelevant background distracts from the focal point. With a background remover, the editor can quickly isolate the subject and place it against a backdrop that strengthens the image's narrative.

The synergy between these tools creates a strong ecosystem for digital artists. Imagine taking a grainy vintage photo, using a watermark remover to clean up old timestamps or scratches, improving the resolution, and then swapping faces to create a modern tribute or a creative artwork. A facial expression changer adds another layer of depth, allowing you to turn a stern-looking model into a smiling, approachable one and so change the emotional resonance of the whole image. As we move through 2025, the boundary between photography and AI-assisted creation continues to blur, opening up a wide range of possibilities for those who master these static tools.

The Dynamics of Video Face Swap: Consistency in Motion

Moving from a static image to video sharply increases the complexity, making video face swap one of the most challenging but valuable frontiers in AI content creation. Unlike a single photo, video typically runs at 24 to 60 frames per second, and every frame needs to be precisely aligned. Video face swap must track the target's head movement, lighting changes, and occlusions (such as a hand passing in front of the face) from frame to frame. If the AI loses consistency for even a second, the illusion breaks and the result falls into the "uncanny valley". That is why advanced algorithms, such as those on faceswap-ai.io, are crucial for creators who want cinema-quality results rather than quick social media clips.
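To make the frame-rate figures above concrete, the sketch below counts how many frames a clip contains and shows one very simple way to reduce frame-to-frame jitter in a tracked face position. The function names and the choice of an exponential moving average are assumptions made for illustration only; this is not the method used by faceswap-ai.io or any other tool named in this article.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def frame_count(duration_s: float, fps: float) -> int:
    """Number of frames that must be processed for a clip of this length."""
    return round(duration_s * fps)


def smooth_track(points: List[Point], alpha: float = 0.5) -> List[Point]:
    """Exponential moving average over per-frame (x, y) positions.

    alpha close to 1.0 follows the raw detections closely;
    alpha close to 0.0 smooths harder but lags behind fast motion.
    """
    smoothed: List[Point] = []
    prev = None
    for x, y in points:
        if prev is None:
            # The first frame has no history, so keep it unchanged.
            prev = (float(x), float(y))
        else:
            prev = (alpha * x + (1 - alpha) * prev[0],
                    alpha * y + (1 - alpha) * prev[1])
        smoothed.append(prev)
    return smoothed


# A 10-second clip needs 240 frames at 24 fps and 600 frames at 60 fps.
print(frame_count(10, 24), frame_count(10, 60))

# Hypothetical face-center detections with a one-frame jump to x = 10.
print(smooth_track([(0, 0), (10, 0), (0, 0)], alpha=0.5))
```

The trade-off is visible in the `alpha` parameter: heavier smoothing hides jitter but makes the swapped face trail behind quick head turns, which is one reason real pipelines tend to need more than a simple filter.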

One of the most exciting applications of this technology is video character replacement. Independent filmmakers and YouTubers can now cast themselves in roles that were previously out of reach, or replace a stunt double's face with the lead actor's in post-production on a minimal budget. However, because of the heavy processing involved, the raw output of a video swap sometimes shows a slight loss of fidelity. To deal with this, professionals usually turn to a video enhancer, which can sharpen edges and restore details that were smoothed away during the swap. In addition, the emergence of the Nano Banana Pro model and similar advanced architectures has significantly improved the speed and accuracy of these renders.

The usefulness of video manipulation is not limited to narrative filmmaking. On social media, GIF face swap has become a viral marketing tool: brands can insert their ambassadors into trending memes or popular movie clips to grab the attention of younger audiences. Technical barriers still exist, however. If the original footage contains distracting elements, for example, a video background remover becomes indispensable. It lets creators move their subject from a living room to a newsroom or a fantasy world. The combination of video face swap technology and environment manipulation tools has paved the way for a new era of "virtual production", in which the physical limitations of the shooting location no longer determine the final visual story.

Elevating Quality: Upscaling, Cleaning, and Advanced Restoration

Achieving a realistic face swap is only half the battle; the other half is the rigorous refinement of the visual assets. Whether you are working on a photo face swap or a complex video project, the artifacts left by digital manipulation can be telltale signs of AI intervention. To maintain credibility and professionalism, creators need a set of restoration tools. One common problem with legacy footage or low-quality source material is pixelation. Here, video upscaling is not a luxury but a necessity. By using deep learning to predict and fill in missing pixels, an upscaler can convert 720p footage into sharp 4K content, ensuring that the swapped face matches the high resolution of modern displays.
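A quick calculation shows how much work that 720p-to-4K conversion implies. The sketch below assumes the common definitions of 720p as 1280×720 and 4K as UHD 3840×2160; the helper names are chosen for illustration.

```python
from typing import Tuple

Resolution = Tuple[int, int]

HD_720P: Resolution = (1280, 720)    # assumed definition of "720p"
UHD_4K: Resolution = (3840, 2160)    # assumed definition of "4K" (UHD)


def pixel_count(res: Resolution) -> int:
    """Total pixels in one frame at the given (width, height)."""
    width, height = res
    return width * height


def scale_factor(src: Resolution, dst: Resolution) -> float:
    """How many output pixels exist for each source pixel."""
    return pixel_count(dst) / pixel_count(src)


src_pixels = pixel_count(HD_720P)           # 921600
dst_pixels = pixel_count(UHD_4K)            # 8294400
factor = scale_factor(HD_720P, UHD_4K)      # 9.0

# Share of each output frame that has no direct source pixel behind it
# and therefore has to be predicted by the upscaler.
predicted_share = 1 - 1 / factor

print(src_pixels, dst_pixels, factor, round(predicted_share, 3))
```

Under these assumptions each source pixel has to account for nine output pixels, so roughly eight out of every nine pixels in the 4K frame are estimated rather than captured.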

Another common obstacle when repurposing content for face swap is the presence of unwanted overlays. You may find the perfect source video, only to see it marred by logos, timestamps, or subtitles. A sophisticated watermark remover can intelligently fill in these occluded areas by analyzing the surrounding pixels, effectively "repairing" the video or image. This is especially useful when creating GIF face swap content from watermarked material or broadcast clips. The goal is to make the final product look as if it was captured natively, without any overlays.
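The idea of filling an occluded area from its surrounding pixels can be illustrated with a toy example. The sketch below is a deliberately naive neighbor-averaging fill on a small grayscale grid, written for illustration only; the function name is hypothetical, and production watermark removers rely on far more capable (often learned) inpainting models.

```python
from typing import List


def inpaint(image: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    """Fill masked pixels from the outside in.

    image: 2D grid of grayscale values.
    mask:  same shape; True marks a pixel covered by the overlay.
    Each pass fills every masked pixel that touches at least one known
    pixel with the average of its known 4-connected neighbors.
    """
    height, width = len(image), len(image[0])
    result = [[float(v) for v in row] for row in image]
    unknown = {(r, c) for r in range(height) for c in range(width) if mask[r][c]}

    while unknown:
        filled = {}
        for r, c in unknown:
            neighbors = [
                result[nr][nc]
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < height and 0 <= nc < width and (nr, nc) not in unknown
            ]
            if neighbors:
                filled[(r, c)] = sum(neighbors) / len(neighbors)
        if not filled:
            break  # the whole image is masked, so there is nothing to borrow from
        for (r, c), value in filled.items():
            result[r][c] = value
        unknown.difference_update(filled)
    return result


# A flat gray patch with one bright "watermark" pixel in the center.
patch = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
overlay = [[False, False, False],
           [False, True, False],
           [False, False, False]]
print(inpaint(patch, overlay)[1][1])
```

On flat regions this simple averaging is enough, but on textured areas such as hair or fabric it produces a visible blur, which is why the quality of the fill matters so much for face swap source material.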

Refinement also extends to stylistic adjustment. Sometimes the lighting on the source face does not match the target environment. Advanced video enhancer tools can now adjust color grading and lighting dynamically. We are also seeing the integration of next-generation models such as VEO 3.1, which are expected to better understand 3D depth and texture, so that when you swap faces the skin reflects light in a way that fits the geometry of the scene. By combining face swap with these high-end restoration and cleanup tools, creators can produce "deepfake"-style content that is hard to distinguish from reality and that suits high-stakes commercial work, educational reenactments, or privacy protection, where the subject's identity needs to be concealed while human emotion and expressiveness are preserved.

Beyond Visuals: Audio Synchronization and The Future of Digital Identity

At the end of the day, a convincing digital character needs more than a visual match; it also requires audio synchronization. For the audience, few things are more jarring than a flawless video face swap accompanied by a voice that does not fit the speaker, or lip movements that are out of sync with the audio track. This is where voice cloning technology and visual AI intersect. By cloning the target's voice, a creator can generate a new audio track that sounds like the person whose face has been swapped in. The visuals have to keep up, though. Lip sync technology has developed to the point where it can modify an actor's mouth movements in the video to match the new track, regardless of the original language or the words originally spoken.

This holistic approach enables remarkable applications. Imagine dubbing a movie into five different languages, but instead of only changing the audio, you use lip sync and face swap so that the actors appear to speak fluent French, Spanish, or Japanese. This helps break down global communication barriers. In addition, tools such as facial expression changers can modify a speaker's emotional tone to match the new audio, ensuring that serious statements look serious and jokes are delivered with a smile.

As we embrace these tools, platforms like faceswap-ai.io are becoming central hubs that bring these different technologies together. From the initial photo face swap, through video upscaling and polish, to voice clone integration, the workflow is becoming streamlined. The creative potential is vast, but it is also important to use these powerful tools responsibly, including the video background remover and the video character replacement function. As VEO 3.1 and other models continue to develop, the difference between captured reality and generated reality will become harder to see. For creators, the message is clear: mastering the full range of AI tools (visual, audio, and restoration) is the key to leading the digital landscape of the future.